The secret behind the Tesla Dojo

At Hot Chips 34, a recent semiconductor industry conference, Tesla demonstrated the microarchitecture of the Dojo supercomputer. The next topic was packaging components into larger systems. Tesla has its hardware and even its transport protocol. Tesla's goal is to build an artificial intelligence supercomputer to meet its serious video AI needs.

Tesla V1 Dojo Interface Processor

Tesla needs a lot of computing power to enable self-driving cars, trucks, and other vehicles to function on their own. Since Tesla needs to process a lot of video data, it is more challenging than just viewing text or still images.

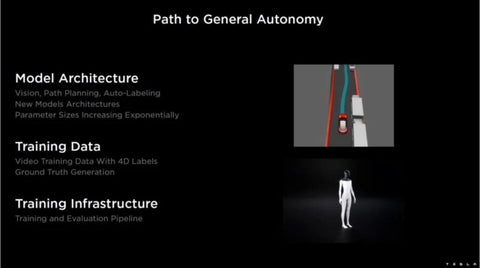

Tesla Dojo System's Path to Universal Autopilot

Tesla Dojo supercomputer-based system-on-a-wafer solution. Just seen is the D1 computing die: the Tesla Dojo AI system microarchitecture. All D1 die are integrated on a single block with 25 dies and 15kw. In addition to the 25 D1 dies, there are 40 smaller I/O dies.

Extension of Tesla Dojo System Technical Support

The training block integrates all power and cooling systems.

Tesla Dojo System Training Block

These training blocks can then be scaled by a 9TB/s link between blocks. These blocks are designed to be simply plugged in without the need for their servers.

Tesla Dojo System Training Block Composition

The Dojo Supercomputer V1 interface processor is a PCIe card with high bandwidth memory.

It can be in a server or standalone. These cards utilize Tesla's TTP interface with huge bandwidth.

Tesla Dojo System Tesla V1 Dojo Interface Processor

In the first generation, up to five cards were used in PCIe host servers, providing up to 4.5TB/s of bandwidth for training blocks.

Tesla Dojo Systems Tesla V1 Dojo Interface Processor PCIe Topology

TTP is the Tesla Transport Protocol, but Tesla is also using Ethernet. The software can see a uniform address space throughout the system. The interface card can also bridge to standard Ethernet.

Tesla Dojo System Tesla Transmission Protocol

PoE can convert standard Ethernet to a Z-plane topology.

Tesla Dojo System Tesla V1 Dojo Interface Processor Z-plane Topology

For example, using a 2D mesh, it may take 30 jumps ...... to be able to convert to the Z-plane and pass only 4 jumps.

Tesla Dojo System Tesla V1 Dojo Interface Processor Z-plane Topology 2

Tesla Dojo System Tesla V1 Dojo Interface Processor Z-plane Topology 3

Dojo also allows for remote direct data access via TTPoE.

Tesla Dojo System Tesla V1 Dojo Network Card

This is the topology.

Tesla Dojo System Tesla Remote Direct Data Access

Here is the current shape of the V1 Dojo training matrix. With the tens of billions of subsolutions, it can scale to 3000 gas pedals.

Tesla Dojo System Tesla V1 Dojo Training Matrix

Designed to be a decomposable, scalable system so that devices (such as gas pedals or HBM hosts) can be added independently.

Tesla Dojo system calculates memory IO

On the software side, the entire system can be considered as a whole. On the static random access memory side, Tesla says that most of its work is done entirely in static random access memory.

Tesla Dojo System Model Implementation

Tesla's presentation goes into the various steps of the model calculation process, but let's move to a higher level of challenge.

Tesla Dojo System End-to-End Training Process

Training requires loading data. Tesla's data is usually video, so data loading becomes a challenge.

Tesla Dojo System End-to-End Training Workflow - Video-Based Training

These are the two internal models of Tesla. Both can be handled by a single host.

Tesla Dojo System End-to-End Training Workflow - Data Loading Requirements1

Other networks can be data load-constrained, such as the third model, and cannot be handled by a single host.

Tesla Dojo System End-to-End Training Workflow - Data Loading Requirements 2

So the host layer can be broken down so that Tesla can add more processing for data loading/video processing, etc. Each has its own NIC.

Tesla Dojo System End-to-End Training Workflow - Decomposing Data Loading

This is an example of slicing input data for data loading across multiple hosts.

Tesla Dojo System End-to-End Training Workflow - Decomposing Data Loading 2

Since the models use different amounts of resources, Tesla's system allows for adding resources to more subdivided models.

Tesla Dojo System End-to-End Training Workflow - Breakdown Resources

Tesla has 120 blocks per Exapod and plans to expand the size of the Exapod.

Postscript

It takes a lot of resources behind building an AI cluster. However, it is not easy for Tesla to demonstrate its custom hardware. Companies like Amazon, Apple and Google rarely do.

While these companies are building their own chips, Tesla is trying to lead the industry and win market share. Our sense is that this demo is designed to attract engineer talent to Tesla, since Tesla doesn't seem to have any intention of selling the Dojo.